- Tom Angleberger

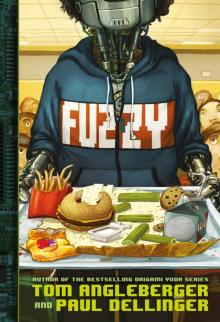

Fuzzy Page 6

“Uh, I forgot the number,” muttered Simeon.

“All right, I’ll do it,” said Max, keeping her distance from both of them in case Barbara was watching.

She clicked the message into her qFlex bracelet.

“Unauthorized use of text-messaging device,” chanted Barbara from the closest screen. “One discipline tag to Maxine Zelaster.”

Max was furious.

(She would have been even angrier if she had known that Barbara had blocked the message. She got the discipline tag without even getting to alert Dr. Jones about Fuzzy’s problems.)

A chime sounded. Great, now she only had one minute to get to class or she’d get another tag.

“I’m sorry, Fuzzy,” whined Simeon, “I’ve got to go to class. You think Dr. Jones is coming?”

Fuzzy didn’t answer.

“Sorry, Fuzz. Good luck, Simeon,” said Max, and she hurried off as fast as she could without triggering Barbara’s “no running” detector.

“Listen, Fuzzy,” said Simeon. “They should be here any minute . . . and I’ve got to get to class myself. So . . .”

And Simeon took off, too.

He and a few other stragglers slipped into their classrooms just before another chime sounded.

The halls were empty now, except for Fuzzy, still leaning there.

He was still on, still thinking, but he was stuck in a subloop: HelpMax(TestScore()).

At first the loop was running in the background, but it soon took over all of his processing power. The loop was very complicated and had all sorts of bits of data and complicated algorithms in it. But if it was translated into English, it would go sort of like this:

>>Max answered 74 of 75 questions correctly.

>>Barbara grades test.

>>Barbara reports Max has failed test.

>>Analyze maxtest.jpg. Compare to correct answers.

>>Max answered 74 of 75 questions correctly.

>>Barbara grades test.

A normal robot could get stuck in a loop like that forever and need a reboot. But Fuzzy had been programmed to use what was called fuzzy logic. He was learning to break out of loops. When he realized he was stuck, he began inserting new variables into the loop, trying to think more like a person. More like Max.

He tried this:

>>My knowledge of science is faulty.

To test this, he began searching online science libraries. He double-checked every answer from the UpGrade test. No, he had been correct right down the line.

>>The test’s answers were incorrect.

He accessed the test makers’ secure website, easily broke their encryption code, and peeked at the answers. No, they were the same answers.

>>Barbara is making mistakes.

This didn’t make sense. There might have easily been a glitch on one question on one test, perhaps a corrupted data file. But, if Max was right about her surprisingly bad grades all year, when she seemed as sure of her answers as she now was on this last test, how could there be a series of many mistakes over the course of months all involving the same student?

>>Barbara is lying.

At first, he almost dismissed that one. Computers and robots don’t lie. But then he remembered how he had fooled Max’s mother. Could Barbara have developed the same ability? But if so, why?

Just then, one of Barbara’s security arms popped out of the wall.

“Improper loitering in hallway. One discipline tag to F. Robot.”

The arm gave him a not too gentle push. It would have been more than enough to get a student moving, but, since Fuzzy was frozen up, he went over like a broken toy and clonked on the floor.

“Improper use of hallway. One discipline tag to F. Robot. Please keep the hallway clear and safe.”

Fuzzy, lying motionless on the floor, tried another possibility:

>>Barbara has gone crazy.

This one explained a lot, and it broke him out of the loop.

He stood up again and sent a message to Dr. Jones.

School computer system known as Vice Principal Barbara seems to be faulty. Suggest software reinstallation to school authorities?

It didn’t seem to go through. He tried again. He wasn’t making contact with the server. He was about to run a check on his wireless system when he noticed Barbara was saying something.

“Unauthorized use of text-messaging device. One discipline tag to F. Robot. Additional unauthorized use of text messaging device. Additional tag to F. Robot. Message content violates school protocols. Additional tag to F. Robot.”

Fuzzy walked off toward his control center to talk to Jones in person.

6.1.5

Barbara hadn’t just blocked those messages. She had read them. And she had not liked them!

Software reinstallation? That was a direct threat to her, and a threat to her was a threat to the school!

This robot student was a problem.

A vice principal’s job is to solve problems.

Sometimes the best way to solve a problem, she had learned, is to have that problem removed.

So she got busy. She sent one message to Max’s father; one to Brockmeyer, Max’s case officer; and one really long one to Dr. Kit Flanders, the Federal Board of Education’s executive director.

6.2

ROBOT INTEGRATION PROGRAM HQ

Dr. Jones’s wrist-phone vibrated while he and Lieutenant Colonel Nina Garland were having a late breakfast in what they had come to call the Fuzzy Control Center.

Jones hit the speakerphone button and muttered, “Jones here,” while still chewing a big bite of soybiscuit.

“Dr. Jones, this is Kit Flanders.”

Jones stopped chewing and swallowed his soybiscuit. “Yes, Dr. Flanders, how are you today?”

“Not good, Dr. Jones. Not when I get a report that your robot is disrupting one of my schools.”

“I haven’t received any report like that,” said Dr. Jones.

“I just got an alert that your robot has received three discipline tags in the last fifteen minutes and has fallen over in a hallway—again—possibly endangering other students!”

Jones turned to the monitors. Fuzzy was walking down a hall, approaching the control center. The incoming-messages screen was blank.

“I haven’t received any messages like that from either Fuzzy or the school computer all morning,” he said.

“Well, I have, and I’ve had enough. The agreement was that your robot would not interfere with the students’ learning. The master school computer—that is, Vice Principal Barbara—reports that not only is it accumulating its own discipline tags, it’s causing students to get them as well. We can’t have this kind of distraction. It could result in lowered #CUG scores, Dr. Jones!”

Kit Flanders was talking fast and getting heated up. Jones, who had no idea what a #CUG was, found himself trying to pacify Flanders and motion to Nina to put on a helmet to review Fuzzy’s recent movements at the same time. It wasn’t working.

“I’m sure Fuzzy would never interfere with, uh, #CUGGING.”

“We call it UpGrading, Jones. And the robot is interfering! Frankly, I don’t understand how your robot is getting all these violations. I thought he had been programmed to follow school rules.”

“Well, not exactly . . .” Jones was rubbing his forehead. Major headaches ahead.

“What? I’m certain that was part of our original agreement,” continued Kit Flanders, now thoroughly heated up. “Every student and staff member in every public school in the country signs the discipline policy, but you thought your robot didn’t need to agree to the rules?”

“We didn’t think it was necessary to actually program—”

“Well, Jones,” Flanders snarled in a sarcastic voice, “I would say it is necessary, if your super-duper robot can’t even get to class without disrupting our educational environment.”

“If you could just give us a moment to review— Oof!” said Jones, trying to put on a helmet while still talking on the phone.

Meanwhile, Flanders went on and on. The upshot was that Jones finally agreed to reprogram Fuzzy to follow every school rule . . .

. . . and to obey exactly every instruction given by the school’s administrative operating system, also known as Barbara.

6.3

HALLWAY B

Fuzzy hesitated outside the control center. His enhanced hearing had picked up Dr. Jones’s agitated voice. Humans certainly seem to spend a lot of their time yelling, he thought.

Fuzzy didn’t hear another voice, so he made the correct assumption that Jones was on the telephone. He didn’t even have to make a decision to eavesdrop; his voice recognition subroutine had already kicked in. “All right, all right,” he heard Jones saying. “We’ll reprogram him.”

Fuzzy did not like this. At all.

He analyzed the meaning of what Jones was saying. He considered possible outcomes of being reprogrammed. He assigned the different outcomes either positive or negative rankings. The negatives won by a mile.

That was the logical side of him. The fuzzy logic part of him simply didn’t like the idea of being reprogrammed. His whole purpose was to reprogram himself, not to be reprogrammed by someone else.

So, what should he do?

He didn’t want to go see Jones.

He didn’t see anything positive in tracking down Simeon.

And he knew he wasn’t supposed to go to Max’s class.

What he really wanted, he realized, was to be somewhere where there was no yelling, no insane computerized vice principal, no reprogrammings.

He wanted to be alone.

So he decided to take a walk.

7.1

ROBOT INTEGRATION PROGRAM HQ

He simply walked out the door. Nobody stopped him, not even the soldiers assigned to protect him.

They followed him while their captain called Nina for orders.

“Lieutenant Colonel! Foxtrot leaving building, approaching perimeter. Advise.” The soldiers didn’t like calling him “Fuzzy.” They had picked “Foxtrot,” which is the military call sign for the letter F, which Nina pointed out was at least as silly as “Fuzzy.”

“Yeah. We see that. Let him go,” said Nina, watching a monitor. “We’re curious to see what he does. Follow him with two vehicles. But give him a big buffer. Let him get into a tiny bit of trouble if he needs to.”

“Colonel Ryder isn’t going to—”

“I don’t care about Colonel Ryder right now!” yelled Jones. “Just follow the robot!”

Nina gave him a look.

“You have my orders, Captain!” she commanded. “Get moving.”

“Yes, ma’am!”

Then she turned to Jones with a raised eyebrow.

“So now you ‘don’t care’ about Colonel Ryder?”

Jones groaned, remembering the hundred and one times he’d been yelled at by the colonel . . . and realizing that Ryder would probably be calling to do more yelling as soon as he heard about Fuzzy leaving the building.

“Of course I care about Ryder!” said Jones. “But this could be the breakthrough we came here for! There was no logical reason for that robot to leave the school! In fact, there were a hundred reasons why it shouldn’t leave the school!”

“Yes,” said Nina, “I remember specifically instructing Fuzzy not to leave the school. He’s packing a lot of awfully valuable technology in his innards while he’s out there running around on his own.”

“He’s breaking rules!” gushed Jones. “He’s making his own decisions, thinking for himself! We’re in uncharted territory here! We may have finally succeeded in creating true artificial intelligence!”

“I don’t know if we’ve created artificial intelligence,” said Nina, “but I think we’ve created artificial teenager.”

7.2

THE PARK

Meanwhile, Fuzzy had downloaded a map of the area and was walking toward a park. He had read that parks are places of peace and quiet, and he wanted to give it a try. He had no experience with private property, sidewalks, or even looking both ways before he crossed the street, so he cut across people’s lawns, slogged through drainage ditches, and walked out into traffic. Luckily there wasn’t much traffic in this sector, so the few automated cars that were on the road easily glided around him. He was aware that Nina’s security team was following him, but since they were keeping their distance, he ignored them.

He got to the park and found that it was a little less peaceful than he’d expected, but he stepped into a grassy area and found that it was pleasant to turn off his HallwayNavigation(), ObjectAvoidance(), and PoliteBehavior() programs for a while.

He saw the park’s robotic gardener at work. It buzzed around, stiffly vacuuming up litter. It glided right past him without pausing. Fuzzy was too big to be litter, and this robot had zero interest in anything that wasn’t litter.

Once it had passed, Fuzzy shut down everything but his core functions and focused all remaining processing power on HelpMax().

7.3

THE PARK

A cargo truck pulled up to the sidewalk as close to Fuzzy as possible.

“What a break!” said Karl, the barrel-shaped man, as he toyed with the trigger on his electromagnetic disruptor. “Let’s grab and go!”

“Would you hold on a second,” snapped Valentina. Those predatorial eyes of hers narrowed again. She stared at the robot, no more than ten feet away.

Decision time.

She considered the offer she had gotten from her “client.”

Ten million dollars for the robot with its programming code and memory intact.

Six million for just the code.

She had hired Zeff to see if she could pick up an easy $6 million by having him hack into the robot or Jones’s computers and simply download the code.

So far, Zeff had gotten nowhere. He still hadn’t been able to track the frequency the robot was using to communicate with Jones. It must be a secure, heavily encrypted military frequency, he’d said. He would need more time and more expensive gear, and he’d need to get up close to the robot.

This wasn’t an easy $6 million after all.

She had hired Karl for the other option: the grab-and-go.

That was worth an extra $4 million . . . at least! Once she actually had the robot, she might be able to bargain for even more. When SunTzuCo, the company that had hired her, reverse-engineered the thing, they’d have the most advanced robotics technology on the planet. That would be worth a lot more than $10 million.

But was it worth the risk?

Downloading some files over the net from a truck several miles away from the school didn’t seem so risky.

Grabbing the robot in broad daylight . . . Now, that seemed risky.

“I wonder if this could be some kind of a trap, to draw us out,” she said out loud. “It looks almost too easy.”

“It couldn’t be,” the barrel-shaped man insisted. “Nobody knows we’re on this job, right? If they knew, the security would be a lot closer.”

“Zeff,” she called into the back of the truck. “Where is the security?”

“Don’t see ’em.”

“I’m not asking you if you see them, I’m asking you if all that junk back there can pick up their location.”

“Oh, uh . . . I’m getting some noise in the military frequency range. Scrambled, of course. Looks like it’s about a click away.”

“Um, what is that in kilometers?” she asked.

“I dunno. I think it’s about half a mile,” replied Karl.

“Zark! That ain’t much.”

“It’s enough for me to grab and go,” said Karl. “Remember, Zeff rewired this truck. It doesn’t obey the speed limit anymore.”

“Yeah, well neither will the zarking U.S. ARMY sitting in those SUVs half a mile away.”

“If we do this right, they won’t even know we’ve—”

“Will you just shut up and let me think a minute?”

Six million dollars . . . Ten million dollars . . .

“Zeff, you getting anything from the robot?”

“Nothing.”

“OK, Karl . . . We’re going to try it your way . . .”

“Yes!” shouted the big man, reaching for the door button.

“Whoa, whoa . . . Pull up your hood and put your eyes on first.”

Karl raised the hood on his jacket. Then he peeled three stickers—each one a close-up photo of a different human eye—from a sheet and placed them on his cheeks and forehead. Valentina did the same.

Zeff snorted. “You guys look like five-eyed spiders.”

“Good,” said Valentina. “Better than looking like a couple of criminals who are in every face database on the planet.”

“Speak for yourself,” said Karl. “I’ve never been convicted.” He tapped his head. “Too smart for ’em.”

“All right, Einstein, go grab the zarking robot then.”

The cargo door on the right side of the van slid open, and Karl got out.

Valentina stepped out, too, but kept one foot inside the van. If they were going to take a risk . . . she wanted Karl to take most of it.

“Zeff, you got the truck ready? Escape route programmed?”

“Yeah.”

“You got the control panel open? You ready to push ‘Go’?”

“Uh, yeah.”

“I need more than an ‘Uh, yeah.’ Are you ready to get us out of here the millisecond Karl shoves that robot in the truck?”

“I said yes!”

“Good . . . Karl, charge your magnetic disruptor—but don’t use it unless absolutely necessary! It could fry some of his data, and that data is worth a Gatesload.”

Karl stepped over to where Fuzzy was standing.

“Excuse me? We read about you in the newspaper. Could we take a selfie with you?”

“I would be very pleased to take a photograph with you,” said Fuzzy.

7.4

THE PARK

“Great,” said Karl. “Just step over this way and meet my wife.”

“Before or after you shove me in the truck?” asked Fuzzy, who had of course overheard everything.
